Here’s something that might sound like science fiction, but stay with us: the future of artificial intelligence might not be in some hyperscale data center in Texas or Virginia. It might be orbiting hundreds of miles above your head. Crazy? Maybe. But when you look at the math, and more importantly the physics, it starts to make a whole lot of sense.

It’s time to take a step back and look at what is actually happening on the ground. AI is hungry. In fact, AI is starving for energy. The International Energy Agency projects that electricity demand from data centers (together with AI and cryptocurrency) could double between 2022 and 2026, to more than 1,000 terawatt-hours a year, roughly the entire electricity consumption of Japan. Think about that for a second. A whole industrialized nation’s worth of electricity, just to power the machines that let you ask ChatGPT for dinner recipes and have Gemini argue with you about whether a hot dog is a sandwich. And energy is just the start of our problems.
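To get a feel for how fast that is, a doubling over four years can be turned into a compound annual growth rate. The short Python sketch below does exactly that; the function name is our own, hypothetical, and not from any library.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate that turns 1 unit into `multiple` units over `years`."""
    return multiple ** (1 / years) - 1


# The IEA projection: demand doubles (2x) between 2022 and 2026, i.e. over four years.
rate = implied_annual_growth(2.0, 2026 - 2022)
print(f"Implied growth: {rate:.1%} per year")  # prints "Implied growth: 18.9% per year"
```

Nearly 19% more demand every single year, compounding.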

> Elon Musk just confirmed the most INSANE IPO in history.
>
> SpaceX is going public in 2026.
>
> $1.5 TRILLION valuation. Raising $30+ billion.
>
> That's the biggest IPO ever made. Beating Saudi Aramco's $29 billion record from 2019.
>
> But here's what everyone's missing:
>
> This isn't about…
>
> Ricardo (@Ric_RTP), December 14, 2025

> Elon Musk: Why a 1 Terawatt AI is impossible on Earth??
>
> "My estimate is that the cost-effectiveness of AI in space will be overwhelmingly better than AI on the ground. So, long before you exhaust potential energy sources on Earth, meaning perhaps in the four or five-year…
>
> X Freeze (@XFreeze), November 19, 2025

The Heat Is Real

Ever stood next to a gaming PC running at full tilt? Now multiply that by about a million. High-density AI clusters—the kind running the latest large language models—generate five times more heat than conventional servers. These things are basically space heaters that occasionally do math. To keep all that silicon from melting into expensive puddles, data centers are using millions of gallons of water every single day. In an era where water scarcity is becoming a genuine crisis in many parts of the world, that’s… not great. We’re literally draining aquifers to train AI models.
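Why does cooling eat so much water? Many facilities reject heat with evaporative cooling towers, and evaporating water soaks up a lot of energy. Here is a back-of-the-envelope sketch, assuming (hypothetically) that every watt of heat from a 100 MW campus is removed by evaporation and that water’s heat of vaporization is about 2.45 MJ/kg near room temperature. Real sites mix cooling methods, so treat this as an order-of-magnitude estimate, not a measurement.

```python
LATENT_HEAT_J_PER_KG = 2.45e6  # assumption: water's heat of vaporization near room temperature
KG_PER_LITER = 1.0             # assumption: 1 liter of water weighs about 1 kg
LITERS_PER_US_GALLON = 3.785411784
SECONDS_PER_DAY = 86_400


def evaporated_gallons_per_day(heat_watts: float) -> float:
    """US gallons evaporated per day if *all* heat is rejected by evaporation (an idealization)."""
    kg_per_second = heat_watts / LATENT_HEAT_J_PER_KG
    liters_per_day = kg_per_second * SECONDS_PER_DAY / KG_PER_LITER
    return liters_per_day / LITERS_PER_US_GALLON


# Hypothetical 100 MW AI campus running at full load
print(f"{evaporated_gallons_per_day(100e6):,.0f} gallons/day")
```

That comes out a little under a million gallons a day for a single campus, which is how the industry as a whole ends up in the millions.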

Running Out of Room

Then there’s the land problem. These facilities aren’t exactly compact. Hyperscale data centers need massive footprints, and communities are increasingly saying “not in my backyard” to proposals for new ones. Some projects are facing years-long delays just waiting for grid connections. Others can’t find suitable locations at all. So we’ve got an energy crisis, a water crisis, and a real estate crisis, all converging on an industry that’s supposed to be the backbone of our technological future.

What’s the Solution? Look Up

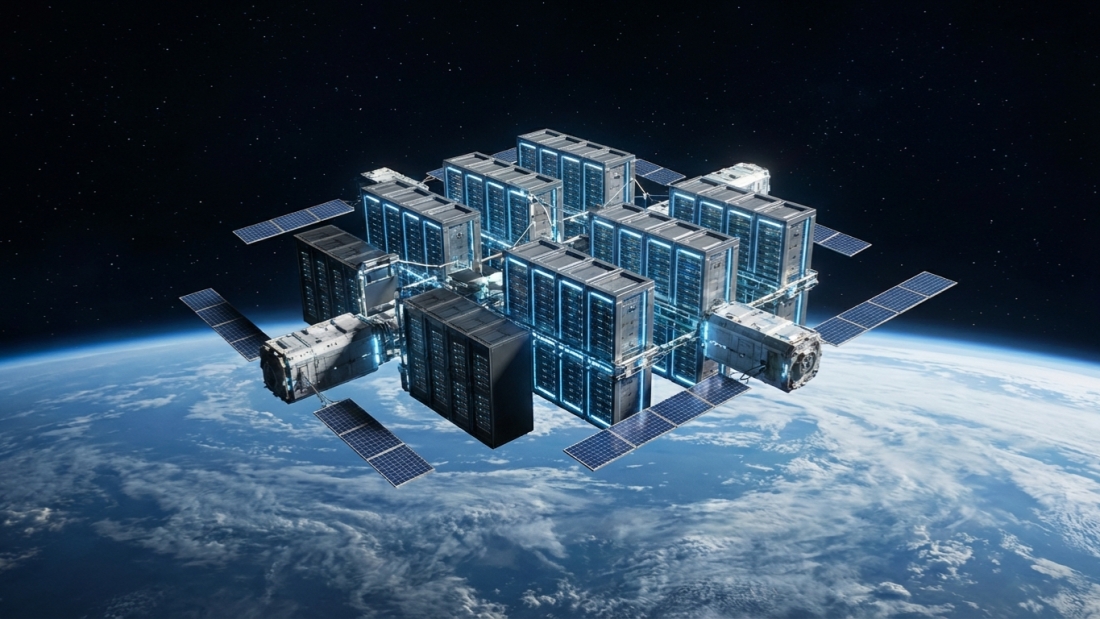

Here’s where it gets interesting. Space, as it turns out, solves basically all of these problems.

Power? In the right orbit, solar panels can catch sunlight nearly 24/7. No clouds, no weather, no nighttime (well, barely any). You get consistent, abundant, free energy beaming down from a fusion reactor 93 million miles away. That’s about as renewable as it gets.
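How much better is orbital sunlight? Here is a rough comparison, assuming a panel that always faces the Sun above the atmosphere (the solar constant, about 1,361 W/m²) and is sunlit 99% of the time in a hypothetical dawn-dusk orbit, against an illustrative 2,000 kWh/m² per year for a very sunny desert site on the ground. Apart from the solar constant, both inputs are assumptions, and the sketch ignores panel efficiency, which affects both sides equally.

```python
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # mean solar intensity above Earth's atmosphere
HOURS_PER_YEAR = 8766.0           # 365.25 days


def orbital_kwh_per_m2_year(sunlit_fraction: float) -> float:
    """Yearly sunlight on 1 m² of Sun-facing panel in orbit, in kWh."""
    return SOLAR_CONSTANT_W_PER_M2 * HOURS_PER_YEAR * sunlit_fraction / 1000


orbit = orbital_kwh_per_m2_year(sunlit_fraction=0.99)  # assumption: near-constant sunlight
ground = 2000.0  # assumption: kWh/m²/year at a very sunny desert site
print(f"orbit: {orbit:,.0f} kWh/m²/yr, about {orbit / ground:.1f}x the ground site")
```

Under these assumptions, orbit delivers roughly six times as much energy per square meter of panel.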

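Cooling? This is the really elegant part, with one catch. Space is cold. Like, really cold: the deep-space background sits at about 3 kelvin, which makes it an effectively bottomless heat sink. You don’t need millions of gallons of water or massive HVAC systems. You just… let the heat radiate away. The catch is that a vacuum has no air or water to carry heat off, so radiating it as infrared light is the only way out, and that is much slower than blowing air or pumping water past the chips. That’s why orbital hardware needs large radiator panels, like the ones on the International Space Station. It’s passive cooling on a cosmic scale, just not a compact one.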
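Radiating heat away still takes surface area. The Stefan–Boltzmann law gives the power a surface radiates as emissivity × σ × T⁴, so the sketch below inverts it to estimate radiator size. The 1 MW heat load, 300 K radiator temperature, and 0.9 emissivity are illustrative assumptions, and the sketch ignores sunlight or Earthshine landing on the radiator, which would make the real number larger.

```python
SIGMA = 5.670374419e-8  # Stefan–Boltzmann constant, W/(m²·K⁴)


def radiator_area_m2(heat_watts: float, temp_k: float, emissivity: float = 0.9) -> float:
    """One-sided radiator area needed to shed `heat_watts` at `temp_k`.

    Assumes the radiator sees only cold deep space: no absorbed sunlight or
    Earthshine, and the ~3 K background is negligible next to temp_k**4.
    """
    return heat_watts / (emissivity * SIGMA * temp_k ** 4)


# Hypothetical: 1 MW of chip heat, radiators running at 300 K (about 27 °C)
print(f"{radiator_area_m2(1e6, 300):,.0f} m² of radiator")
```

That is on the order of 2,400 m², roughly a 50 m by 50 m panel, per megawatt. Radiating from both faces roughly halves it, and running the radiators hotter helps a lot because of the T⁴ term: the same panel at 350 K sheds about 85% more heat than at 300 K.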

Space? I mean, come on. It’s literally called space. We’re talking about near-unlimited room for expansion. No zoning boards, no NIMBYs, no competing land uses. Just endless vacuum waiting to be filled with TPUs.

The Big Players Are Already Making Moves

If you’re thinking this is all theoretical—some far-off possibility being kicked around in academic papers—think again. The biggest names in tech are putting serious money behind this idea.

Google’s Project Suncatcher

Google has officially gone full moonshot with Project Suncatcher. The plan? Deploy constellations of solar-powered satellites loaded with Google’s custom Tensor Processing Units, all connected by high-bandwidth laser links forming a distributed supercomputer in orbit.

They’re not just drawing up blueprints, either: a prototype mission in partnership with Planet is set for early 2027 to test the core technologies. Google is already testing how well its TPUs hold up under radiation and working out how to control tightly clustered satellite formations. When Sundar Pichai says “our TPUs are headed to space,” you know things are getting real.
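One reason tight formations matter is basic link physics. In a simple far-field model, where the laser beam has spread wider than the receiving telescope, received power falls off with the square of the distance, so shrinking the gap between satellites buys link capacity fast. The sketch below compares a hypothetical 1 km spacing against a hypothetical 1,000 km spacing; both distances are illustrative, not Suncatcher design figures.

```python
def relative_received_power(distance_km: float, reference_km: float) -> float:
    """Received power at `distance_km` relative to a link at `reference_km`.

    Simple far-field free-space model: power scales as 1 / distance**2.
    """
    return (reference_km / distance_km) ** 2


# Hypothetical spacings: a tight 1 km formation vs. satellites 1,000 km apart
gain = relative_received_power(distance_km=1.0, reference_km=1000.0)
print(f"{gain:,.0f}x more received power")  # prints "1,000,000x more received power"
```

That margin is why formation control becomes a core engineering problem: the payoff only holds if the satellites stay close together without colliding.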

SpaceX: Elon’s Orbital Ambitions

Of course Elon Musk is involved. How could he not be? SpaceX is planning to turn future generations of Starlink satellites into full-blown orbital data centers. The next-generation Starlink V3 satellites will be significantly larger and more powerful than their predecessors, equipped with processors and inter-satellite laser links to create a computing mesh network in space.

The timing here isn’t coincidental. Musk’s AI company xAI needs enormous computational resources to train models like Grok. Building that infrastructure in space, using SpaceX’s own rockets, is a very Musk way of solving the problem. Reports suggest SpaceX is even considering a massive IPO—potentially valued at $1.5 trillion—partly to fund this orbital computing vision.

Amazon’s Project Kuiper

Not to be outdone, Jeff Bezos has his own satellite play with Project Kuiper (recently rebranded Amazon Leo). While primarily positioned as a broadband internet constellation, the deeper play is integration with AWS. Kuiper will provide enterprise and government clients secure, direct connectivity to Amazon’s cloud from anywhere on Earth—no public internet required.

Bezos himself has publicly discussed how space-based data centers could benefit from natural cooling and more effective solar power within the next 10 to 20 years. When the guy who built AWS starts talking about orbital computing, it’s probably time to pay attention.

OpenAI’s Stargate: The Scale of What’s Coming

Here’s where we really understand why space might be necessary. OpenAI’s Stargate initiative—a joint venture with SoftBank and Oracle—is a $100 billion to $500 billion plan to build a nationwide network of AI data centers. We’re talking about nearly 7 gigawatts of capacity across sites in Texas, Ohio, Wisconsin, and New Mexico.
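To put nearly 7 gigawatts in perspective, the sketch below converts capacity into yearly energy. Running flat out all year is an upper-bound assumption; the hypothetical `utilization` parameter lets you plug in a lower, more realistic figure.

```python
HOURS_PER_YEAR = 8760


def annual_twh(capacity_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn in one year by `capacity_gw` of load at the given average utilization."""
    gwh = capacity_gw * utilization * HOURS_PER_YEAR
    return gwh / 1000  # GWh -> TWh


print(f"Stargate at full load: {annual_twh(7.0):.1f} TWh/year")
print(f"At an assumed 70% utilization: {annual_twh(7.0, 0.7):.1f} TWh/year")
```

Roughly 61 TWh a year at full load, for a single company’s buildout.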

Now, Stargate is a terrestrial project. But its sheer scale illustrates exactly why companies are being forced to think beyond Earth. When you need half a trillion dollars just to build enough infrastructure to pursue Artificial General Intelligence, you start running into the hard limits of what our planet can actually provide.

The Real Question

So is this actually going to happen? Will we really be running GPT-7 or whatever comes next on satellites orbiting 400 miles overhead? The challenges are real. Radiation in space fries electronics. Latency could be an issue for certain applications. Launching and maintaining orbital infrastructure isn’t exactly cheap (yet). There’s debris to worry about. And we still need to figure out how to build and repair these things at scale.
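Latency has a hard physical floor set by the speed of light. For a satellite 400 miles directly overhead, the sketch below computes the round trip; it deliberately ignores slant range to satellites near the horizon, hops across laser links, ground-station routing, and processing time, all of which add to it.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
KM_PER_MILE = 1.609344


def round_trip_ms(altitude_miles: float) -> float:
    """Speed-of-light round trip to a satellite straight overhead and back, in ms."""
    one_way_km = altitude_miles * KM_PER_MILE
    return 2 * one_way_km / SPEED_OF_LIGHT_KM_S * 1000


print(f"{round_trip_ms(400):.1f} ms round trip")
```

The propagation floor is only a few milliseconds; the practical concern is everything layered on top of it, which matters most for interactive, latency-sensitive workloads rather than long-running batch jobs like model training.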

But here’s the thing: some of the biggest names in tech are working on this. Google, Amazon, SpaceX: these aren’t small players making wild bets. They’re the companies that built much of the internet infrastructure we rely on today. When several of them independently start looking to orbit as the answer to AI’s scaling problem, that tells you something.

The physics doesn’t lie. Earth has limits: in energy, in cooling capacity, in available land. Space doesn’t, at least not on anything like the same scale. And as AI models continue to grow exponentially, demanding ever more compute, the gap between what we need and what Earth can provide is only going to widen.

Maybe the real question isn’t whether AI moves to space. Maybe it’s whether we can get there fast enough.
