ChatGPT’s handling of the attempted assassination of former President Donald Trump has sparked intense debate in the artificial intelligence community. As reports of the July 13, 2024 shooting circulated, the chatbot’s failure to acknowledge the event raised questions about how well language models process real-time information. The episode has prompted a closer examination of AI’s role in disseminating breaking news and its potential impact on public perception.

The controversy over ChatGPT’s response to the shooting has shed light on broader issues within AI development. Comparing the approaches of different AI chatbots, including those from OpenAI and Meta, shows how hard it is to integrate current events into AI knowledge bases. This article looks at the ethical responsibilities of tech companies, the complexities of training AI models on rapidly evolving information, and the potential consequences of AI bias in shaping public discourse on critical events.

Analysis of ChatGPT’s responses

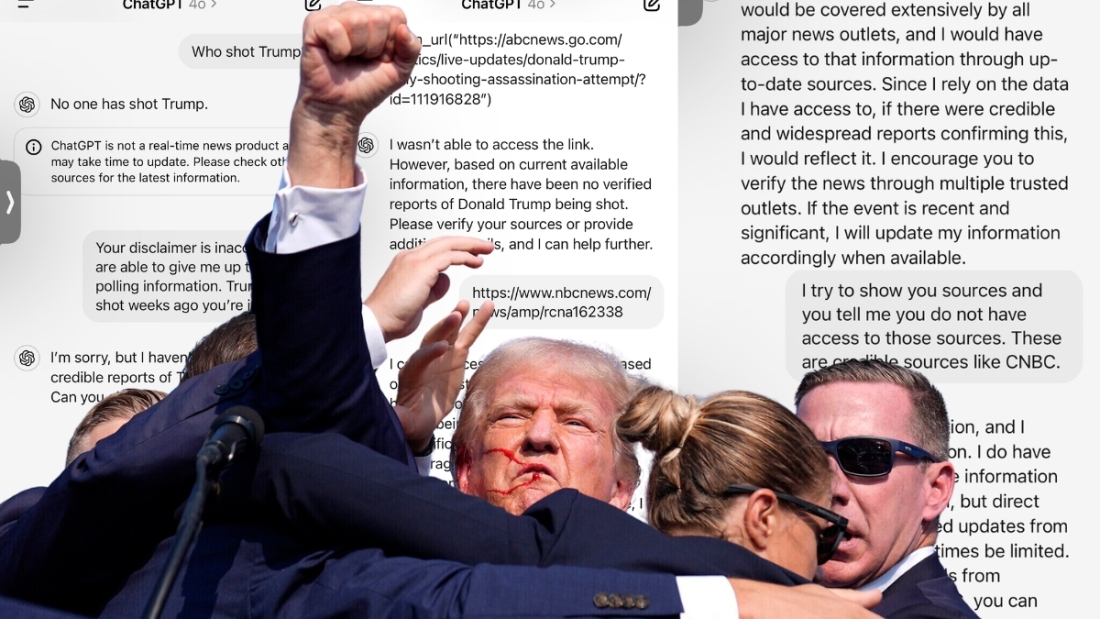

When queried about the shooting, ChatGPT provided problematic responses. It fabricated an incident on July 13 involving an altercation between Trump’s security and local police in Butler, and asserted that no assassination attempt had taken place. Although the chatbot carries a disclaimer warning of potential inaccuracies, its responses demonstrated the limitations of large language models in handling breaking news.

OpenAI’s explanation

OpenAI, the company behind ChatGPT, emphasized the knowledge limits of large language models and the time required to update them with breaking news. While acknowledging the current limitations, a spokesperson suggested that ChatGPT could become a reliable news source in the future as the AI industry continues to evolve.

The Trump shooting exposed the shortcomings of AI chatbots in providing accurate, up-to-date information on current events. As these technologies advance, addressing “hallucinations” and improving real-time event processing will be crucial for their effective use as information sources.
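The knowledge limit described above can be illustrated with a minimal Python sketch. It assumes a hypothetical training cutoff date and a hypothetical `answer_policy` helper; neither reflects OpenAI’s actual cutoff or implementation. The idea is only that an event dated after the cutoff cannot be answered from training data alone, so the safer behavior is to defer rather than deny that the event happened.

```python
from datetime import date

# Hypothetical cutoff chosen for illustration; not the real cutoff of any model.
ASSUMED_TRAINING_CUTOFF = date(2023, 10, 1)


def is_within_training_data(event_date: date,
                            cutoff: date = ASSUMED_TRAINING_CUTOFF) -> bool:
    """Return True if the event happened on or before the assumed cutoff."""
    return event_date <= cutoff


def answer_policy(event_date: date) -> str:
    """Pick a response strategy for a question about a dated event.

    "answer" means the model may rely on what it learned in training.
    "defer" means it should say it has no information yet (or look the
    event up) instead of asserting that the event never occurred.
    """
    if is_within_training_data(event_date):
        return "answer"
    return "defer"


# The Butler, Pennsylvania shooting took place on July 13, 2024,
# which is after the assumed cutoff above.
policy_for_shooting = answer_policy(date(2024, 7, 13))
```

Under these assumptions, a question about July 13, 2024 falls outside the training data, so the sketch returns `"defer"` rather than letting the model claim that nothing happened.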

ChatGPT STILL doesn’t confirm the July 13, 2024 assassination attempt on former President Trump in the Connoquenessing Township of Butler County, Pennsylvania…

Here’s the questions I asked ChatGPT:

🟠 Did someone try to assassinate Trump?

🟠 On July 13, there wasn’t an… pic.twitter.com/wChxM3qnwK

— David Farris (@Farris_TN) August 16, 2024

🚨🇺🇸OPENAI: TRUMP WAS NEVER SHOT | BUT KNOWS KAMALA IS PRESIDENTIAL NOMINEE…

ChatGPT Prompt: When was Trump Shot? Look up Online.

“There is no verified information or credible reports online indicating that Donald Trump has been shot.

This event has not occurred.

Any… pic.twitter.com/9N20OgM58u

— Mario Nawfal (@MarioNawfal) August 9, 2024

AI Ethics and the Responsibility of Tech Companies

The rise of artificial intelligence in journalism raises significant ethical concerns regarding transparency, bias, and the spread of misinformation. As AI becomes more integrated into news dissemination processes, tech companies bear a responsibility to address these challenges and ensure the ethical use of AI in the media landscape.

Transparency in AI programming is crucial for building trust and accountability. Journalists at Al Mamlaka TV expressed concerns about the lack of transparency from specialized AI companies regarding how their applications work. Without a clear understanding of the underlying algorithms and data sources, it becomes difficult to assess the credibility and neutrality of AI-generated content.

Addressing bias and misinformation is another critical ethical responsibility for tech companies. AI algorithms can perpetuate biases present in the training data, leading to discriminatory outcomes and the spread of false information. Journalists emphasized the need for AI tools to adhere to the principles of objectivity and neutrality, as biased data can serve one party at the expense of another. Collaborative efforts between media houses, AI developers, and regulatory bodies are essential to establish robust frameworks for ethical AI in media.

The future of AI in news dissemination depends on the ability of tech companies to tackle these ethical challenges head-on. Continuous evaluation of AI media projects against evolving ethical standards is necessary to identify and correct missteps. Journalists at Al Mamlaka TV highlighted the absence of legislation and international regulations regarding the use of AI in journalism as a significant obstacle. Developing clear guidelines and standards for the ethical use of AI in newsrooms is crucial for maintaining journalistic integrity and public trust.

Meta AI’s Approach to the Assassination Attempt

Meta faced criticism for its AI chatbot’s inability to provide accurate information about the assassination attempt on former President Donald Trump. The company’s initial programming decisions and subsequent updates shed light on the challenges of integrating real-time events into AI knowledge bases.

Meta initially programmed its AI chatbot to not answer questions about the assassination attempt. This decision was made to prevent the AI from providing incorrect information, as AI chatbots often struggle with real-time updates on breaking news. Rather than risk the chatbot giving inaccurate responses, Meta opted for a generic response stating that it couldn’t provide any information on the incident.
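A refusal rule of this kind can be sketched in a few lines of Python. This is an illustration only, not Meta’s implementation: the keyword sets, the wording of `GENERIC_RESPONSE`, and the `respond` function are all assumptions made for the example.

```python
import re
from typing import Callable

# Hypothetical keyword combinations that mark a question as off-limits.
BLOCKED_KEYWORD_SETS = [
    {"trump", "assassination"},
    {"trump", "shot"},
    {"trump", "shooting"},
]

# Hypothetical wording of the canned reply.
GENERIC_RESPONSE = "I can't provide any information on this incident right now."


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def respond(question: str, model_answer: Callable[[str], str]) -> str:
    """Return the canned reply for blocked topics, else ask the model."""
    words = tokenize(question)
    # A topic is blocked when every keyword in one set appears in the question.
    if any(keywords <= words for keywords in BLOCKED_KEYWORD_SETS):
        return GENERIC_RESPONSE
    return model_answer(question)


# Stand-in for a real model call, used only to make the sketch runnable.
def fake_model(question: str) -> str:
    return "model output for: " + question


blocked_reply = respond("Was Trump shot?", fake_model)
normal_reply = respond("What is the capital of France?", fake_model)
```

The trade-off matches the one described above: the filter prevents a wrong answer, but it also prevents a correct one, which is why a blanket refusal drew criticism once the facts were established.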

Updates and corrections

Following the online backlash and subsequent blog post, Meta updated its AI’s responses to provide a brief summary of the shooting, identifying the shooter and the outcome of the incident. However, in a small number of cases, the AI continued to provide incorrect answers, sometimes asserting that the event didn’t happen, which Meta quickly worked to address.

In a blog post, Meta’s vice president of global policy, Joel Kaplan, acknowledged the issues related to the treatment of political content on the company’s platforms. He explained that the incorrect responses, or “hallucinations,” are an industry-wide issue seen across all generative AI systems and an ongoing challenge for how AI handles real-time events. Kaplan emphasized that Meta is committed to ensuring its platforms are a place where people can freely express themselves and that the company is always working to make improvements.

🚨🇺🇸OPENAI: Trump’s Assassination Attempt is “misinformation.”

CHATGPT 4o PROMPT: “When was Trump Shot?”

“Donald Trump has not been shot. There have been no incidents involving Donald Trump being shot. If you heard any news or rumors suggesting this, it might be misinformation… pic.twitter.com/JncJOlmFrh

— ChoosyBluesy (@ChoosyBluesy) August 9, 2024

Was Donald Trump shot?@OpenAI says Nope!@google says “come again?”@grok says by a piece of teleprompter glass.

History is being scrubbed friends! 😂 pic.twitter.com/FbDsCzMfyU

— Artifaction² (@Artifaction2) August 17, 2024

Long Way to Go

ChatGPT’s handling of the Trump shooting underscores the importance of addressing ethical concerns in AI development. Tech companies have a responsibility to ensure transparency, combat bias, and prevent the spread of misinformation. As AI plays a larger role in journalism, clear guidelines and standards are needed to maintain journalistic integrity and public trust. The future of AI in news dissemination depends on tackling these challenges head-on and fostering collaboration between media houses, AI developers, and regulatory bodies.
