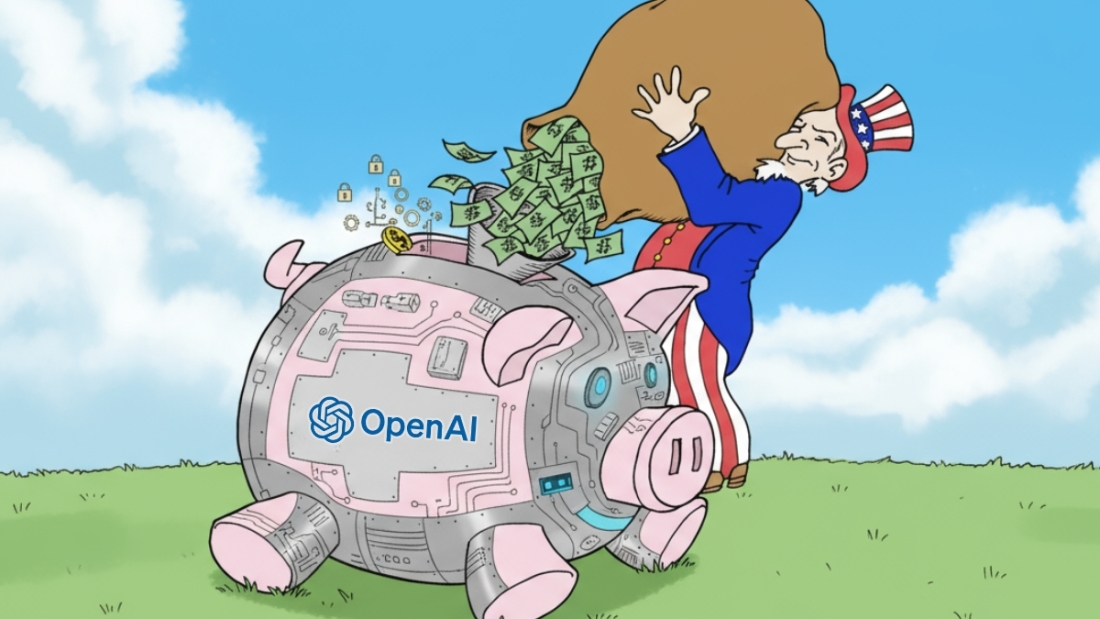

Discussions about a government bailout for OpenAI are unfolding behind closed doors while Sam Altman publicly denies seeking federal support. The contradiction points to a sophisticated strategy: secure taxpayer funding without the political backlash of openly requesting a bailout. OpenAI’s financial situation has deteriorated rapidly, with $5 billion in losses during 2024 alone. The first half of 2025 proved catastrophic, with $13.5 billion in red ink, followed by another $12 billion loss in the most recent quarter. Altman’s public statements ring hollow against this backdrop of financial distress. He insists OpenAI neither has nor wants “government guarantees” for its data center operations. Yet sources suggest the company has held promising discussions with the Trump White House about potential federal assistance. This carefully orchestrated approach seeks to reframe private sector failures as national security imperatives.

The arithmetic of artificial intelligence tells a sobering story. Bain & Company estimates the industry requires $2 trillion by 2030 to achieve profitability, a figure that dwarfs most government spending programs. Success rates remain abysmal, with TechRepublic reporting that 85% of AI implementations fail outright. OpenAI now frames its infrastructure requirements alongside national manufacturing priorities, a strategic repositioning designed to transfer financial risk from Silicon Valley venture capitalists to American taxpayers. What emerges is a calculated campaign to normalize government intervention in private AI ventures. The precedent carries profound implications for how technological advancement intersects with public funding, and it raises a fundamental question: should taxpayers underwrite speculative ventures that private markets increasingly view with skepticism? Many are asking how we got here and where we are going.

The Great AI Build-out

Imagine a complex ecosystem where chips, cloud capacity, data centres, and models all flow like water. On one side you have deep-tech labs such as OpenAI that need vast compute; on the other, the suppliers of that compute—hardware vendors, cloud providers, and infrastructure builders. Now layer in investors and national governments, and the web becomes dense.

Some of the major linkages:

Microsoft committed to OpenAI with a multi-billion-dollar deal and secured exclusive access to large-scale GPU clusters on Azure.

Nvidia, the dominant GPU vendor, struck a strategic equity investment with OpenAI in return for supply of next-gen hardware.

Oracle entered the game, partnering with OpenAI and securing GPU infrastructure deals (via Nvidia) to host AI workloads at scale outside of Azure.

SoftBank made a bold financial bet on OpenAI, pivoting away from Nvidia to become a major investor in the AI model side of this ecosystem.

Meta Platforms is simultaneously building its own internal superclusters, considering custom chips and GPU deals with AMD and Nvidia.

AMD is positioning itself as a potential alternative to Nvidia in AI hardware, with support from Microsoft among others.

CoreWeave, a GPU cloud-native provider, is stepping in to supply AI compute capacity to big buyers (including OpenAI) and is backed by Nvidia.

Broadcom was tapped by OpenAI to help design its first in-house AI chip, signalling that the hardware model is morphing.

Collectively, these companies form a constellation of partnerships and deals that map out the frontier of AI infrastructure. What stands out isn’t just that the money is big; it’s that everything is being tied together: chips → cloud → models → applications → users. That linkage is what gives this wave its magnitude.

SoftBank just sold its Nvidia position to invest in the company that Nvidia invested in (which also buys chips from Nvidia) pic.twitter.com/Ix6ngp8AfT

— litquidity (@litcapital) November 11, 2025

The Deals That Built the Modern AI Stack

OpenAI ↔ Microsoft

Microsoft invested over $10 billion in OpenAI and obtained exclusive Azure deployment rights. Azure built dedicated GPU supercomputers for OpenAI, with clusters of tens of thousands of Nvidia GPUs. Microsoft integrates OpenAI models into Bing, Office, and GitHub; OpenAI gains compute and global reach.

OpenAI ↔ Nvidia

Nvidia committed up to $100 billion in investment and GPU supply to OpenAI over multiple years, ensuring preferential access to its newest hardware. Nvidia secures a premier AI customer; OpenAI locks in its supply chain.

OpenAI ↔ Oracle + SoftBank (Project “Stargate”)

A joint initiative worth around $300 billion over five years aims to build as much as 10 GW of AI data centre capacity. Oracle will develop the majority, with SoftBank adding energy and capital resources. The move diversifies OpenAI beyond Azure and cements Oracle’s relevance in cloud AI.

OpenAI ↔ CoreWeave

An $11.9 billion GPU cloud contract grants OpenAI massive external compute capacity. CoreWeave gains prominence as a non-hyperscale supplier critical to AI infrastructure diversification.

Microsoft ↔ AMD

Microsoft backs AMD’s MI300X chip development, giving Microsoft a second-source alternative to Nvidia. AMD gains marquee validation and funding.

Meta Platforms ↔ Nvidia (and AMD)

Meta is deploying 100,000+ Nvidia H100s while experimenting with AMD GPUs and in-house MTIA chips, pushing open-hardware standards and reducing vendor lock-in.

The JPMorgan CapEx Thesis

JPMorgan Chase estimates that to justify the current AI infrastructure boom, the industry must generate roughly $650 billion in annual revenue by 2030, the equivalent of $34.72 per month from every iPhone user worldwide.

Their logic is simple: the AI economy has entered a capital cycle of historic proportions. Training foundation models, constructing data centres, and manufacturing advanced chips already require hundreds of billions annually. The projection assumes AI transitions from experimental tools to global productivity infrastructure, much like electricity or the internet.

If monetisation lags, or if enterprise adoption remains slow, the returns collapse. That risk defines the razor’s edge between a justified technological transformation and an over-capitalised bubble.
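The per-user figure in the JPMorgan estimate can be sanity-checked with simple arithmetic. The Python sketch below back-derives how many paying users the $34.72 monthly figure implies. The function names are illustrative, and the resulting user count is an inference from the two numbers quoted above, not a figure taken from JPMorgan.

```python
def implied_payers(annual_revenue_usd: float, monthly_fee_usd: float) -> float:
    """Number of people who would each need to pay the monthly fee
    for twelve months to reach the annual revenue target."""
    return annual_revenue_usd / (12 * monthly_fee_usd)


def monthly_fee_per_user(annual_revenue_usd: float, users: float) -> float:
    """Monthly payment per user needed to reach the annual revenue target."""
    return annual_revenue_usd / users / 12


# Figures quoted in the article.
ANNUAL_REVENUE_TARGET = 650e9   # USD per year by 2030
MONTHLY_FEE = 34.72             # USD per iPhone user per month

# Back-derived, not sourced: the user base implied by the two figures above.
payers = implied_payers(ANNUAL_REVENUE_TARGET, MONTHLY_FEE)
print(f"Implied paying users: {payers / 1e9:.2f} billion")  # 1.56 billion

# Sensitivity check: if only half that many people paid, the fee doubles.
print(f"Fee at half the user base: ${monthly_fee_per_user(ANNUAL_REVENUE_TARGET, payers / 2):.2f}")
```

Put differently, the target only works if roughly one and a half billion people each pay for AI at about the price of a premium streaming bundle, every month.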

👉Softbank sells entire Nvidia position.

👉Oracle debt downgraded.

👉Meta financing games revealed.

👉OpenAI CEO @sama couldn’t explain how company would meet its $1.4 T obligations.

👉Coreweave drops 20% in a week.

You do the math. pic.twitter.com/hYuRjkKICl

— Gary Marcus (@GaryMarcus) November 11, 2025

Inside the Data Centre Arms Race

The new oil fields of this era aren’t underground; they’re on the grid.

Modern AI data centres draw staggering power. Single campuses approach 1 GW loads, with rack densities exceeding 40 kW and advanced liquid-cooling systems.
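To give a sense of what those figures mean physically, here is a rough back-of-the-envelope sketch in Python. It uses the 1 GW campus load and 40 kW rack density mentioned above. The `pue` parameter (power usage effectiveness, the ratio of total facility power to IT power) and the assumption of continuous full load are illustrative assumptions, not figures from this article.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def max_racks(campus_power_w: float, rack_power_w: float, pue: float = 1.0) -> int:
    """Upper bound on rack count: campus power divided by PUE gives the
    power left for IT equipment, which is then split across racks."""
    it_power_w = campus_power_w / pue
    return int(it_power_w // rack_power_w)


def annual_energy_twh(power_w: float, utilization: float = 1.0) -> float:
    """Energy drawn over a year, in terawatt-hours, at a given average utilization."""
    return power_w * utilization * HOURS_PER_YEAR / 1e12


CAMPUS_POWER_W = 1e9   # 1 GW campus (from the article)
RACK_POWER_W = 40e3    # 40 kW per rack (from the article)

print(max_racks(CAMPUS_POWER_W, RACK_POWER_W))            # 25000 racks if all power reached IT gear
print(max_racks(CAMPUS_POWER_W, RACK_POWER_W, pue=1.25))  # 20000 racks with an assumed PUE of 1.25
print(annual_energy_twh(CAMPUS_POWER_W))                  # about 8.76 TWh per year at full load
```

Because the article describes rack densities as exceeding 40 kW, the rack counts here are upper bounds; denser racks mean fewer of them for the same power budget.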

Key dynamics:

Hardware dominance: Nvidia controls 80% of training GPUs; AMD and custom chips (OpenAI/Broadcom, Microsoft Athena, Meta MTIA) are rising challengers.

Deployment model: Prefabricated pods, high-speed InfiniBand networking, and modular designs speed rollout.

Energy sourcing: Hyperscalers pursue long-term PPAs and renewable projects; some are reviving nuclear capacity to secure baseload power.

Physical risk: Overcapacity and grid shortages threaten delays if infrastructure is completed before adequate energy supply exists.

The infrastructure is real and vast, but whether it can be powered and profitably used is the defining question.

Oracle Is First AI Domino To Drop After Barclays Downgrades Its Debt To Sell https://t.co/BZ7vCxPUx6

— zerohedge (@zerohedge) November 11, 2025

Bubble or Breakthrough?

The Breakthrough Case

AI has become a general-purpose technology touching every sector from engineering and construction to finance and healthcare. If adoption continues and costs fall through custom chips and better efficiency, today’s build-out may prove prescient. Early movers gain moats; capacity translates to market dominance.

The Bubble Case

Valuations and spending are detached from present revenues. If AI monetisation remains weak or enterprise adoption slows, infrastructure built today could sit idle for years, mirroring the fiber and dot-com overbuilds of the early 2000s.

CoreWeave $CRWV default insurance pricing: https://t.co/gxqSQh3M2I pic.twitter.com/kJPlAgxQdN

— Consensus Media (@ConsensusGurus) November 11, 2025

The Energy Bottleneck — AI’s Hidden Constraint

Perhaps the most critical and under-examined risk is energy generation and grid capacity.

Every layer of the AI stack (training clusters, cooling systems, inference networks) depends on electricity. And the numbers are daunting: global data centre energy use is set to double by 2030, driven largely by AI workloads. The U.S. alone may see AI-centric demand rise thirty-fold by 2035.

The problem is speed. Building gigawatts of new generation capacity takes years: permitting, transmission lines, transformers, and renewable integration cannot be deployed at the pace of Silicon Valley’s roadmaps. As a result, the compute expansion is running ahead of the power supply that fuels it.

Why this matters:

Delays and idle capital: If grids can’t connect new campuses in time, billions in hardware will sit unused.

Rising power costs: Utilities already signal rate hikes to fund grid upgrades, cutting into ROI.

Renewable shortfalls: Net-zero commitments conflict with limited clean energy availability; operators may resort to fossil sources or face public backlash.

Regulatory pushback: Regions like Ireland and Virginia are restricting new data centers until grid capacity improves.

This energy gap is the potential Achilles’ heel of the AI boom. Without a massive and coordinated build-out of generation and transmission, the $650 billion AI economy will struggle to materialise on schedule. Compute is scaling like software, but power scales like infrastructure, and that mismatch could hamstring the entire industry.

Understating depreciation by extending useful life of assets artificially boosts earnings -one of the more common frauds of the modern era.

Massively ramping capex through purchase of Nvidia chips/servers on a 2-3 yr product cycle should not result in the extension of useful… pic.twitter.com/h0QkktMeUB

— Cassandra Unchained (@michaeljburry) November 10, 2025

Why the Bears Are Right, But the Bulls Will Win

The skeptics aren’t wrong. The bears see the cracks forming beneath the surface of the AI buildout: unsustainable capital expenditure, lagging monetization, rising energy costs, and a supply chain stretched to the breaking point. They point to history, from the dot-com bust to the telecom overbuild to the railroad mania, and remind us that every technological revolution burns too hot before it stabilizes. They’re right to say not every company will survive, not every data center will find demand, and not every trillion-dollar valuation will be justified.

But this time, the bulls will still win.

Because this isn’t just a corporate bet; it’s a national one. The AI economy has crossed the threshold from speculation to strategic imperative. Compute is now a pillar of national security, economic stability, and global influence. The U.S. cannot afford for AI to fail any more than it could afford to lose the space race. The capital markets understand this, and so do policymakers, defense strategists, and institutional investors who quietly align their portfolios with geopolitical necessity.

The bears are right about the distortions too, especially in the numbers. Across the hyperscalers (Meta, Google, Microsoft, Amazon, and Oracle), a quiet accounting maneuver has artificially smoothed earnings during the AI investment surge. Each company has extended the “useful life” of its compute and networking hardware from roughly three years to five or six. On paper, that makes these record-breaking GPU and server purchases appear efficient; in reality, it just delays the expense.

Since AI chips age out in 24–36 months, this maneuver is effectively an earnings booster disguised as efficiency. Analysts estimate that from 2026 to 2028, the largest players could understate depreciation by more than $170 billion, overstating profits by up to 25 percent. It isn’t fraud; it’s financial oxygen, keeping the optics of profitability alive long enough for the infrastructure to take root.
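The mechanics of the useful-life extension are easy to see with a worked example. The Python sketch below assumes straight-line depreciation with no salvage value and a hypothetical $100 billion hardware purchase; both are illustrative assumptions, since the article does not specify the depreciation method or any single company’s capex.

```python
def straight_line_annual(cost_usd: float, useful_life_years: int) -> float:
    """Annual depreciation expense under straight-line depreciation, no salvage value."""
    return cost_usd / useful_life_years


def deferred_expense(cost_usd: float, short_life: int, long_life: int, years: int) -> float:
    """Expense pushed out of the first `years` years by using the longer life.
    Only meaningful while years <= short_life (both schedules still running)."""
    per_year_gap = straight_line_annual(cost_usd, short_life) - straight_line_annual(cost_usd, long_life)
    return per_year_gap * years


CAPEX = 100e9  # hypothetical hardware purchase, USD (not a figure from the article)

three_year = straight_line_annual(CAPEX, 3)  # about $33.3B of expense per year
six_year = straight_line_annual(CAPEX, 6)    # about $16.7B of expense per year

print(f"3-year schedule: ${three_year / 1e9:.1f}B per year")
print(f"6-year schedule: ${six_year / 1e9:.1f}B per year")
print(f"Expense deferred over the first 3 years: ${deferred_expense(CAPEX, 3, 6, 3) / 1e9:.1f}B")
```

Total depreciation over the asset’s life is identical under both schedules. What changes is the timing: the longer schedule halves the annual charge, so reported profit is higher in the early years and the remaining cost lands later, or arrives as a write-off if the hardware is retired early.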

Like the Dot-Com Bubble?

It’s a page torn straight from the telecom boom playbook. Two decades ago, firms extended the lifespan of fiber assets to hide the cost of their overbuild. Eventually, the write-offs came, but the networks remained and the world was permanently wired. The same outcome is likely here: even if depreciation resets and valuations compress, the compute layer will remain baked into the U.S. industrial base, a sunk cost that becomes a national asset.

And that’s the paradox. Even if the bears are right about over-investment, inefficiency, and accounting distortion, the bulls will win because the system is built to protect the bet. Over $6 trillion sits in U.S. money-market accounts, the most liquid capital base in history. Every market correction in AI will be met with absorption. Liquidity, institutional capital, and strategic alignment form a de facto backstop.

If valuations fall, write-downs will be absorbed. If firms stumble under the weight of their spending, debt can be refinanced cheaply. And if quarterly profits disappoint, the strategic mission, U.S. compute dominance, will override the optics. This is an industrial campaign disguised as a market trend, and every layer of the system (private capital, policy, and monetary infrastructure) is built to ensure it endures.

So yes, the bears are right: the numbers are stretched, the spending is enormous, and the risks are real. But the bulls will win because the AI buildout is now a matter of national security. Once an industry becomes too strategic to fail, the question isn’t whether it will survive; it’s what form its survival will take.

JPMorgan says the next five years could require $5T to $7T in total investment. About $1.5T may come from investment-grade bonds, plus $150B from leveraged finance and up to $40B a year in data-center securitizations.

Even then, there’s still roughly a $1.4T funding gap likely… pic.twitter.com/FouLcm11l8

— Wall St Engine (@wallstengine) November 10, 2025

Where does this go?

Electrons are the new oil, but electricity is its carbon. Without massive energy deployment, AI’s ambition is limited by physics and policy.

The AI race is beginning to resemble the Cold War space race, only this time the battleground is silicon and power. The United States and China are locked in a technological sprint, each pouring unprecedented capital into compute, chips, and energy infrastructure. But as history reminds us, it wasn’t the rockets that decided the Cold War; it was the balance sheet. The Soviet Union collapsed under the financial weight of sustaining its technological ambitions.

If AI infrastructure continues to scale faster than its economic return, the same dynamic could unfold in miniature: overextension, then consolidation. Yet unlike the USSR, the U.S. today possesses a unique financial weapon: a deep, liquid capital market with trillions of dollars sitting on the sidelines. Between money market funds, corporate cash reserves, and institutional liquidity, every selloff or correction in AI is likely to be met with absorption. The system itself acts as a stabilizer, ensuring the core of the AI ecosystem remains intact even during turbulence.

And if private markets can’t shoulder it all, the public sector will. Whether through subsidies, guarantees, or strategic bailouts, the U.S. government will not allow the compute race to be lost. The stakes are too high. AI is no longer a corporate arms race; it’s a national security priority. The future of digital dominance, military capability, and economic power all hinge on compute supremacy.

So, where does this go?

Whether this era ushers in a sustainable transformation or a capital overbuild depends on how quickly we bridge the gap between chips and power, and on how long nations can afford to keep the race going.

The trillion-dollar question isn’t whether AI will change everything. It’s whether we can keep the lights on and the capital flowing long enough to win the race.
